Immersive Visual Media

The LISA-VR image synthesis research unit focuses on the end-to-end processing pipeline for Virtual and eXtended Reality (VR-XR). The scene is captured from multiple fixed viewpoints using a variety of RGB and/or RGB+depth capturing devices (left). The data is then filtered and compressed into the MPEG-immersive format (middle), so that any virtual viewpoint of the scene can be synthesized at the client side (right). The rendering can be stereoscopic and/or holographic. Real-time processing is especially demanding on the server side (left-middle), which relies on multi-GPU servers. The client side (right) typically runs on a single GPU using OpenGL, Vulkan and/or CUDA.

3DLicorneA, Innoviris-Brussels grant agreement no. 2015-DS-39a/b & 2015-R-39c/d, focused mainly on multi-camera acquisition and rendering, and developed the Reference View Synthesizer (RVS) used in the MPEG Immersive Video (MIV) standardization activities. The MIV file format achieves high compression gains over conventional simulcast approaches, which compress each camera view separately.

The bottom animation in the image aside shows a MIV bullet time navigation that Vimmerse prepared on our open access ChocoFountainBxl multi-camera sequence.

HoviTron, European Union’s Horizon 2020 research and innovation programme grant agreement no. 951989, goes one step beyond 3DLicorneA by running all processing steps (without compression) in real time, using depth sensing devices whose output nevertheless needs heavy depth filtering. It targets tele-operation applications, where the scene is visually teleported to the user through his/her headset. Each head pose triggers the synthesis of the corresponding virtual viewpoint of the scene (bottom of the figure).
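The pose-driven synthesis described above can be illustrated with a minimal depth-based reprojection sketch. It is not the RVS or HoviTron implementation: it assumes an ideal pinhole camera model, and the names `PinholeCamera`, `unproject`, `project` and `reproject_pixel` are hypothetical helpers introduced only for this example. A pixel with known depth in a captured view is lifted to a 3D point and projected into the virtual camera defined by the current head pose.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]


@dataclass
class PinholeCamera:
    """Ideal pinhole camera (assumption: no lens distortion)."""
    fx: float        # focal length in pixels, horizontal
    fy: float        # focal length in pixels, vertical
    cx: float        # principal point, horizontal
    cy: float        # principal point, vertical
    rotation: Mat3   # camera-to-world rotation matrix
    position: Vec3   # camera centre in world coordinates


def _mat_vec(m: Mat3, v: Vec3) -> Vec3:
    """Multiply a 3x3 matrix by a 3-vector."""
    return tuple(sum(m[r][c] * v[c] for c in range(3)) for r in range(3))


def _mat_t_vec(m: Mat3, v: Vec3) -> Vec3:
    """Multiply the transpose of a 3x3 matrix by a 3-vector."""
    return tuple(sum(m[c][r] * v[c] for c in range(3)) for r in range(3))


def unproject(cam: PinholeCamera, u: float, v: float, depth: float) -> Vec3:
    """Lift pixel (u, v) with depth along the optical axis to a world point."""
    p_cam = ((u - cam.cx) / cam.fx * depth,
             (v - cam.cy) / cam.fy * depth,
             depth)
    p_rot = _mat_vec(cam.rotation, p_cam)
    return tuple(p_rot[i] + cam.position[i] for i in range(3))


def project(cam: PinholeCamera, p_world: Vec3) -> Optional[Tuple[float, float, float]]:
    """Project a world point; returns (u, v, depth) or None if behind the camera."""
    d = tuple(p_world[i] - cam.position[i] for i in range(3))
    x, y, z = _mat_t_vec(cam.rotation, d)  # world-to-camera uses the transposed rotation
    if z <= 0.0:
        return None
    return (cam.fx * x / z + cam.cx, cam.fy * y / z + cam.cy, z)


def reproject_pixel(src: PinholeCamera, dst: PinholeCamera,
                    u: float, v: float, depth: float):
    """Map a source pixel with known depth into the virtual (destination) view."""
    return project(dst, unproject(src, u, v, depth))


if __name__ == "__main__":
    identity = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
    captured = PinholeCamera(500.0, 500.0, 320.0, 240.0, identity, (0.0, 0.0, 0.0))
    # Hypothetical head pose: virtual camera shifted 10 cm to the right.
    virtual = PinholeCamera(500.0, 500.0, 320.0, 240.0, identity, (0.1, 0.0, 0.0))
    print(reproject_pixel(captured, virtual, 320.0, 240.0, 2.0))
```

Rendering a complete virtual view would additionally require resolving occlusions when several source pixels land on the same target pixel, and filling the regions that no source camera observed; both are left out of this sketch.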

HoviTron also provides a true holographic visualization of the scene using CREAL’s holographic headset. The teleoperator not only sees the scene from any viewpoint, but also experiences true eye accommodation on each object in the scene, as if he/she were physically standing in front of it.

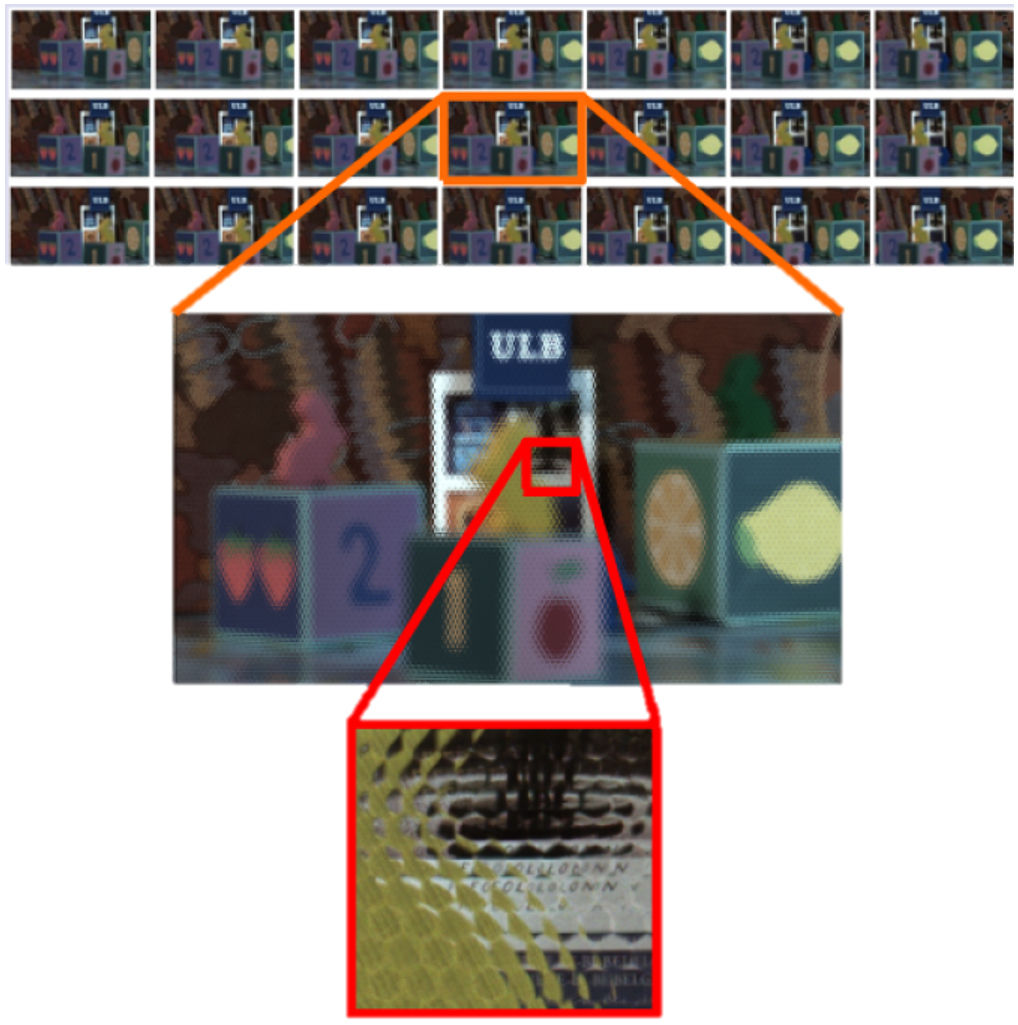

The research on Plenoptic cameras is funded by Emile DEFAY grant agreement no. 4R00H000236, Belgium, and HoviTron, EU Horizon 2020 grant agreement no. 951989. A Plenoptic camera (e.g. RayTrix) captures hundreds of tiny images in one shot thanks to a Lenslet objective (an objective composed of hundreds of micro lenses). We started by developing a calibration method (MMSP2021) and a tool, the Reference Plenoptic Virtual camera Calibrator (RPVC), needed to estimate the optical parameters of plenoptic camera arrays. This project also explores different methodologies (IC3D2021) and provides a tool, the Reference Lenslet Convertor (RLC), for converting Lenslet images to multiview images. From this research we have provided a dataset that consists of 7x3 viewpoints. The outcome of the project contributes to the international MPEG standardization for Lenslet Video Coding (LVC).
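To give an intuition for the Lenslet-to-multiview conversion mentioned above, here is a deliberately simplified sketch. It is not the RLC algorithm: it assumes an idealized lenslet image in which every micro-image is a square of `k` x `k` pixels perfectly aligned with the pixel grid, so that picking the same pixel offset under every micro lens yields one viewpoint. The function names are hypothetical, and real lenslet images generally require calibration and more elaborate processing.

```python
from typing import List

Image = List[List[int]]  # grayscale image as rows of pixel values


def extract_view(lenslet: Image, k: int, i: int, j: int) -> Image:
    """Collect pixel (i, j) of every k x k micro-image into one view.

    Assumption: micro-images are square, k pixels wide, and aligned with
    the pixel grid (an idealization, not a property of real cameras).
    """
    if not (0 <= i < k and 0 <= j < k):
        raise ValueError("offset (i, j) must lie inside a k x k micro-image")
    rows = len(lenslet) // k      # number of micro lenses vertically
    cols = len(lenslet[0]) // k   # number of micro lenses horizontally
    return [[lenslet[r * k + i][c * k + j] for c in range(cols)]
            for r in range(rows)]


def lenslet_to_multiview(lenslet: Image, k: int) -> List[List[Image]]:
    """Return a k x k grid of views, one per pixel offset under the micro lenses."""
    return [[extract_view(lenslet, k, i, j) for j in range(k)]
            for i in range(k)]


if __name__ == "__main__":
    # Toy 4x4 lenslet image made of 2x2 micro-images.
    toy = [[r * 4 + c for c in range(4)] for r in range(4)]
    views = lenslet_to_multiview(toy, 2)
    print(views[0][0])
    print(views[1][1])
```

Each resulting view has one pixel per micro lens, which shows the trade-off in this idealized layout between the number of viewpoints and the resolution of each view.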

The research on Tensor Displays is funded by the FER 2021 project, grant agreement no. 1060H000066-FAISAN, Belgium. One crucial tool in the 3D video research field is a 3D video display or Light Field Display, such as the tensor display, where the viewer can sense depth without wearing special glasses. Such a display consists of several transparent Liquid Crystal Display (LCD) layers stacked in parallel, with a white backlight behind all the LCD layers. Each LCD layer shows a colour pattern. From the viewer's perspective, the linear combination of the patterns of each layer forms an image that corresponds to the viewer's location, as shown in the image aside. In this research, we explore the limitations of existing technologies for visualizing non-Lambertian objects on a tensor display for virtual reality applications. Moreover, a prototype display has been developed and is currently being extended to address the new challenges. Finally, we aim to create an end-to-end system from plenoptic camera to tensor display (without compression yet).

The COLiBR²IH project, grant agreement no. CDR J.0096.19 from Fonds de la Recherche Scientifique (FRS-FNRS), Belgium, has shown that it is possible to reproduce holograms from laser-free acquisition using conventional cameras. Various acquisition and synthesis methods have been compared, showing that as few as four RGBD images captured from the scene can faithfully reproduce all the holographic viewpoints, as demonstrated in the video at the left for the Blender Classroom scene. We show in our overview paper on view synthesis using the MPEG-I rendering framework that our Reference View Synthesizer (RVS) often outperforms recently published view synthesis methods based on deep neural networks, without the need for long, scene-dependent training phases.

Contacts

Support

- Innoviris/Région Bruxelloise

- FEDER

- FRIA/FNRS

- European Regional Development Fund

- Service Public de Wallonie Recherche

- ARC (Fédération Wallonie-Bruxelles)

- BELSPO

- EU-H2020

- Emile DEFAY